Machine Learning class note 2 - Linear Regression

I. Linear regression

0. Presentation

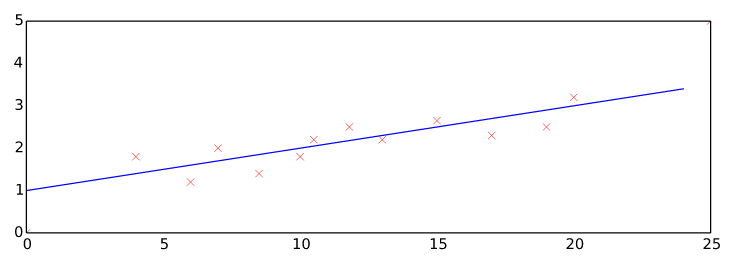

Idea: try to fit the best line to the training sets

1. Hypothesis function

Vectorized form:

Octave implemtation

Prepping by adding an all-one-column in front of X for

h = theta' * X

The line fits best when the distance of our hypothesis to the sample training set is minimum.

Distance from hypothesis to the training set

2. The cost function

Do the above for every sample point we arrive at the cost function below (or square error function or mean squared error)

Octave implementation

J = 1 / (2*m) * sum((X * theta - y).^2)

Vectorized form:

J = 1 / (2*m) * (X*theta - y)' * (X*theta - y);

3. Batch Gradient Descent algorithm

To find out the value of when is min, we can use batch Gradient descent rule below:

Repeat until convergence

and if we take the partial derivative of respect to we have:

Repeat until convergence

Octave implementation

for feature = 1:size(X, 2)

theta(feature) = theta(feature) - alpha*(1/m) * sum((X*theta-y) .* X(:,feature));

end

Vectorized form:

Octave implementation

theta = theta - alpha*(1/m) * (X' * (X*theta-y));

Notes on using gradient descent

Gradient descent need many iteration and works well for number of features n > 10,000

Complexity of

Choosing alpha:

Should step 0.1, 0.3, 0.6, 1 …

Feature normalization

To make gradient descent converges faster we can normalize the data a bit

where mu_i is the average of all the value of feature i

s_i is the range of value or the standard deviation of feature i

After having theta, we can plug X and theta back in hypothesis function to find out the prediction

4. Adding Regularization parameter

Why Regularization ?

The more features introduced, the higher chances that overfitting happens. To address overfitting, we can reduce the number of features or use regularization:

- Keep all the features, but reduce the magnitude of parameters θj.

- Regularization works well when we have a lot of slightly useful features.

How? Adding regularization parameter changing:

Regularized cost function:

Regularized Gradient Descent:

We will modify our gradient descent function to separate out from the rest of the parameters because we do not want to penalize .

Repeat

{

}

5. Normal equation

There is another way to minimize . This is the explicitly way to compute value of without resorting to an iterative algorithm.

Sometimes is non-invertable. Some of the reasons include existence of redundant features or too many features . We should recude number of feature or switch to gradient descent in such case.

Octave implementation

theta = (X' * X)^(-1) * X' * y;

Notes on using Normal equation

No need to choose alpha.

No need to run many iterations.

Feature scaling is also not necessary.

Complexity of since we need to calculate inverse of or pinv(X'* X)

Close of n is very large, should switch to gradient descent.

We can also apply regularization to the normal equation

Recall that if , and may be non-invertible if then is non-invertible. However, when we add the term , then becomes invertible.